MetaVIn: Meteorological-Visual Integration

Overview

MetaVIn is a deep learning framework for estimating atmospheric turbulence strength in long-range imaging by integrating meteorological data with visual information. Presented at WACV 2025 (Paper ID #724), it offers accessible and accurate turbulence strength estimation, which is crucial for mitigating turbulence in applications such as remote sensing, UAV surveillance, and astrophotography.

Challenges

Atmospheric turbulence introduces blur and geometric distortions in long-range images because the refractive index of air varies along the light path. The severity of these effects is quantified by the refractive index structure parameter (Cn2). Traditional methods for estimating Cn2 often rely on expensive and bulky optical equipment, such as scintillometers or wavefront sensors, which limits their widespread adoption.

MetaVIn tackles several key challenges. It handles image degradation caused by large propagation distances, extreme weather conditions, and camera vibrations. It avoids the limitations of video-based approaches, which fail under real-world conditions with motion. And it fuses meteorological data with image sharpness metrics to capture path-averaged turbulence using only single-frame inputs.
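For reference, Cn2 is conventionally defined through the Kolmogorov structure function of the refractive index. The relation below is the standard textbook form, not something specific to MetaVIn; the symbols $n$, $\mathbf{r}$, $l_0$, and $L_0$ are introduced here for illustration and do not appear in the original text.

$$
D_n(r) = \left\langle \left[ n(\mathbf{x} + \mathbf{r}) - n(\mathbf{x}) \right]^2 \right\rangle = C_n^2 \, r^{2/3}, \qquad l_0 \ll r \ll L_0
$$

Here $n$ is the refractive index, $r = |\mathbf{r}|$ is the separation between two points, and $l_0$ and $L_0$ are the inner and outer scales of turbulence. $C_n^2$ has units of $\mathrm{m}^{-2/3}$, and larger values correspond to stronger turbulence.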

Methodology

MetaVIn was trained and evaluated on a dataset of 35,364 samples collected across multiple geographic locations. Each sample includes ground-truth Cn2 values obtained from a large-aperture scintillometer; meteorological data such as temperature, wind speed, humidity, and solar loading; single-frame images extracted from videos; and distance measurements from a cost-effective laser rangefinder. The dataset spans diverse weather conditions, with temperatures ranging from -5.3°C to 32.8°C and wind speeds of up to 19 m/s. To ensure robust evaluation, training was performed on designated subsets (BRS datasets) and testing on separate subsets (BTS datasets). Data imputation was used to fill occasional sensor gaps.
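The text does not say which imputation technique was used for the sensor gaps. As one plausible illustration only, the sketch below fills missing readings in a sensor series by linear interpolation. The function name `interpolate_gaps` and the use of `None` to mark a missing reading are assumptions made for this example, not details of MetaVIn.

```python
def interpolate_gaps(series):
    """Fill None entries by linear interpolation between valid neighbours.

    Assumption for illustration: readings are evenly spaced in time.
    Gaps at either end copy the nearest valid reading.
    """
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("series has no valid readings")

    filled = list(series)
    for i, value in enumerate(series):
        if value is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:            # gap at the start
            filled[i] = series[right]
        elif right is None:         # gap at the end
            filled[i] = series[left]
        else:                       # interior gap: interpolate linearly
            t = (i - left) / (right - left)
            filled[i] = series[left] + t * (series[right] - series[left])
    return filled


# Hypothetical temperature readings in degrees Celsius with two gaps
temperature = [None, 10.0, None, None, 16.0, None]
filled_temperature = interpolate_gaps(temperature)
```

Linear interpolation is only reasonable for short gaps in slowly varying quantities such as temperature; longer outages would likely need a different strategy.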

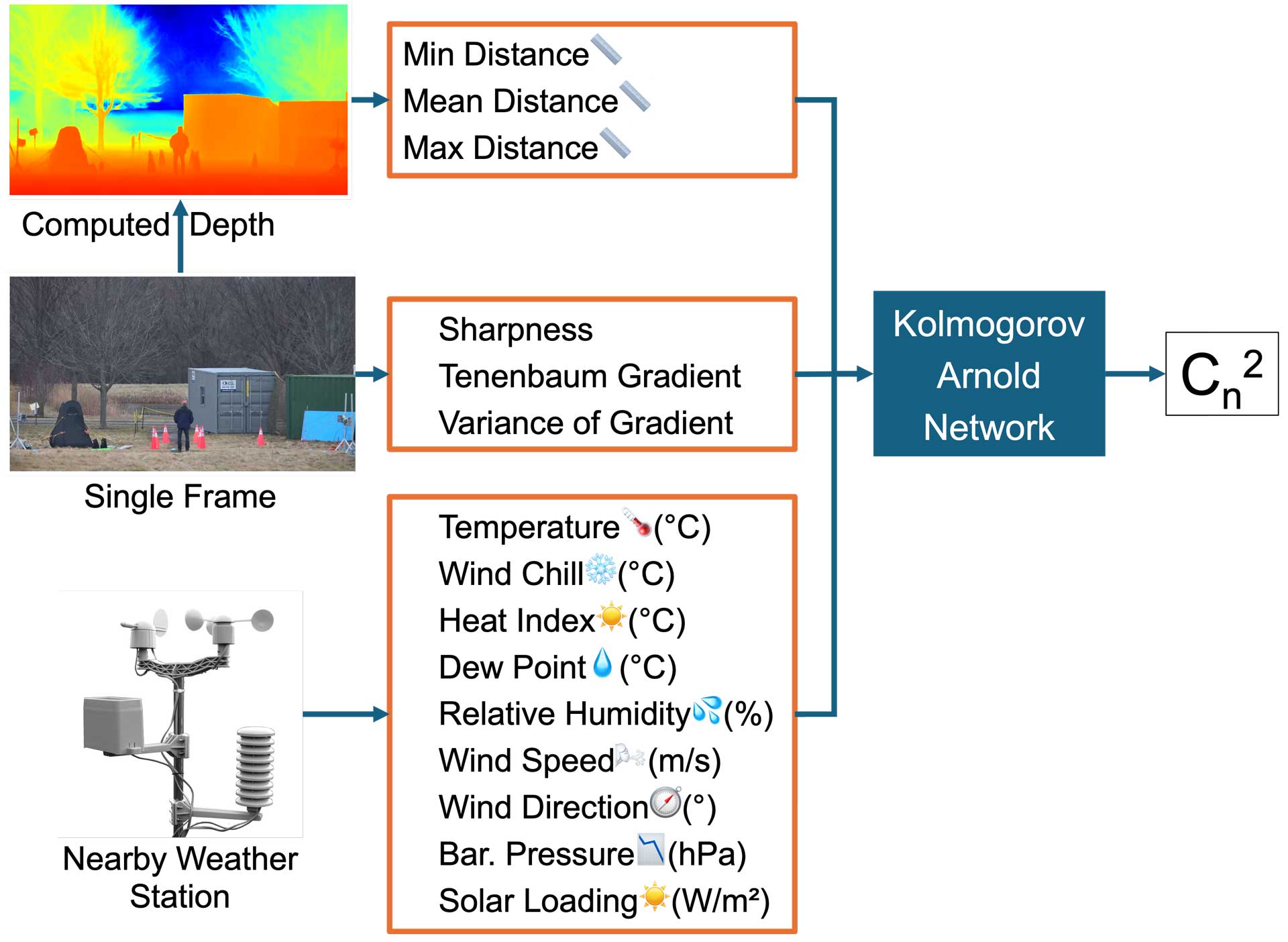

At the core of MetaVIn is a Kolmogorov-Arnold Network (KAN) that fuses meteorological data, image sharpness metrics, and distance measurements to predict Cn2. The framework extracts visual features from single frames to quantify blur, namely the sum of the Laplacian, Tenengrad, and the variance of gradients, while encoding weather station data and solar loading into compact representations. The KAN uses learnable univariate activation functions to capture the nonlinear relationships between environmental conditions, image quality, and turbulence strength. With only a few hidden units and a high learning rate, it predicts log-scaled Cn2 efficiently, without the need for massive training sets or complex computing hardware.
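The three sharpness metrics named above can be sketched in a few lines. This is a minimal pure-Python illustration using common textbook definitions (absolute Laplacian response, mean squared Sobel gradient, variance of gradient magnitude). The kernels, the normalization, and the function name `sharpness_features` are assumptions for this example, and MetaVIn's exact definitions may differ.

```python
from statistics import pvariance

# Standard 3x3 kernels; the choice of kernels is an assumption for this sketch.
LAPLACIAN = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def _filter3(img, kernel):
    """Apply a 3x3 kernel to a 2D list of grey levels (interior pixels only)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    acc += kernel[dy + 1][dx + 1] * img[y + dy][x + dx]
            row.append(acc)
        out.append(row)
    return out


def sharpness_features(img):
    """Return three blur-sensitive scores for a single greyscale frame."""
    lap = _filter3(img, LAPLACIAN)
    gx = _filter3(img, SOBEL_X)
    gy = _filter3(img, SOBEL_Y)
    # Squared gradient magnitude at every interior pixel
    grad_sq = [a * a + b * b
               for row_x, row_y in zip(gx, gy)
               for a, b in zip(row_x, row_y)]
    grad_mag = [g ** 0.5 for g in grad_sq]
    return {
        "sum_laplacian": sum(abs(v) for row in lap for v in row),
        "tenengrad": sum(grad_sq) / len(grad_sq),
        "grad_variance": pvariance(grad_mag),
    }


# A hard vertical edge scores higher than a smooth ramp of the same contrast.
step = [[0.0] * 3 + [255.0] * 3 for _ in range(6)]
ramp = [[0.0, 51.0, 102.0, 153.0, 204.0, 255.0] for _ in range(6)]
step_scores = sharpness_features(step)
ramp_scores = sharpness_features(ramp)
```

All three scores drop as an image gets blurrier, which is why they can serve as a proxy for turbulence-induced degradation. In practice an array library would replace the nested loops.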

A key element of MetaVIn is its fusion mechanism, which integrates visual features with meteorological signals so that the estimate depends on the combined inputs rather than on either source alone. Drawing on both image clarity and atmospheric information keeps predictions robust across varying conditions.
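To make the idea of learnable univariate functions concrete, here is a forward-pass sketch of a single KAN-style layer. Each output is a sum of one-dimensional functions, one per input. As a deliberate simplification, each function is piecewise linear between knot values that would be learned during training, whereas the original KAN formulation uses B-splines combined with a base activation. The class names, grids, and knot values are all hypothetical and are not taken from MetaVIn.

```python
import bisect


class PiecewiseLinearEdge:
    """One univariate function phi(x), defined by values at fixed knots.

    In a trained network the knot values would be the learnable parameters.
    """

    def __init__(self, grid, values):
        self.grid = list(grid)      # increasing knot positions
        self.values = list(values)  # function value at each knot

    def __call__(self, x):
        g, v = self.grid, self.values
        x = min(max(x, g[0]), g[-1])                     # clamp to the grid range
        k = min(bisect.bisect_right(g, x), len(g) - 1)   # index of the right knot
        k = max(k, 1)
        t = (x - g[k - 1]) / (g[k] - g[k - 1])
        return (1 - t) * v[k - 1] + t * v[k]


class KANLayer:
    """Each output j is the sum over inputs i of edges[j][i](x_i)."""

    def __init__(self, edges):
        self.edges = edges

    def __call__(self, xs):
        return [sum(phi(x) for phi, x in zip(row, xs)) for row in self.edges]


# Hypothetical normalized inputs: one weather, one sharpness, one distance feature.
grid = [-1.0, 0.0, 1.0]
layer = KANLayer([[
    PiecewiseLinearEdge(grid, [0.2, 0.0, 0.6]),   # weather feature
    PiecewiseLinearEdge(grid, [0.9, 0.1, -0.4]),  # sharpness feature
    PiecewiseLinearEdge(grid, [-0.3, 0.0, 0.3]),  # distance feature
]])
prediction = layer([0.5, -0.2, 0.8])  # one scalar, standing in for log-scaled Cn2
```

Because every input passes through its own one-dimensional function before summation, the contribution of each feature can be plotted and inspected directly.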

Results and Evaluation

MetaVIn outperformed both traditional techniques and other deep learning approaches. It achieved a Spearman correlation of 0.943 between predicted and ground-truth Cn2 values, along with a low mean absolute error (MAE) on log-scaled predictions. The framework proved robust against vibrations, moving objects, and variable weather conditions. It was significantly more accurate and reliable than classical image quality assessment metrics, such as BRISQUE or NIQE, and than deep CNN models like EfficientNetV2. Moreover, SHAP analysis underscored the importance of the combined meteorological, visual, and distance features in achieving precise turbulence estimation.

Impact and Future Work

MetaVIn has significant implications for surveillance, remote sensing, astrophotography, and autonomous systems, because it enables clearer long-range imaging under challenging atmospheric conditions. However, the current implementation has limitations: it relies solely on single-frame metrics without spatiotemporal analysis for continuous Cn2 tracking, and it depends on co-located weather stations along with a single laser rangefinder measurement.

Future work will aim to integrate spatiotemporal features to enable continuous turbulence tracking, optimize real-time GPU pipelines for faster processing, and employ transfer learning to adapt the framework across diverse climates. These improvements are intended to advance meteorological and visual data fusion for robust long-range imaging in real-world applications.

For more information about MetaVIn, visit https://metavin.rksaha.com.